Transformer-Based Text-to-Speech Model (and Commentary)

Introduction

I first approached text-to-speech synthesis from an engineering perspective in a class, when I tried to write my own implementation of Tacotron 2 [1], a model that was competitive when it debuted. The project I presented was not great, but I learned a lot from my mistakes, and I think that is what matters. I also think this experiment offers many good design choices for machine learning enthusiasts to learn from, even though RNN-based (specifically LSTM-based) architectures are no longer the most competitive models today.

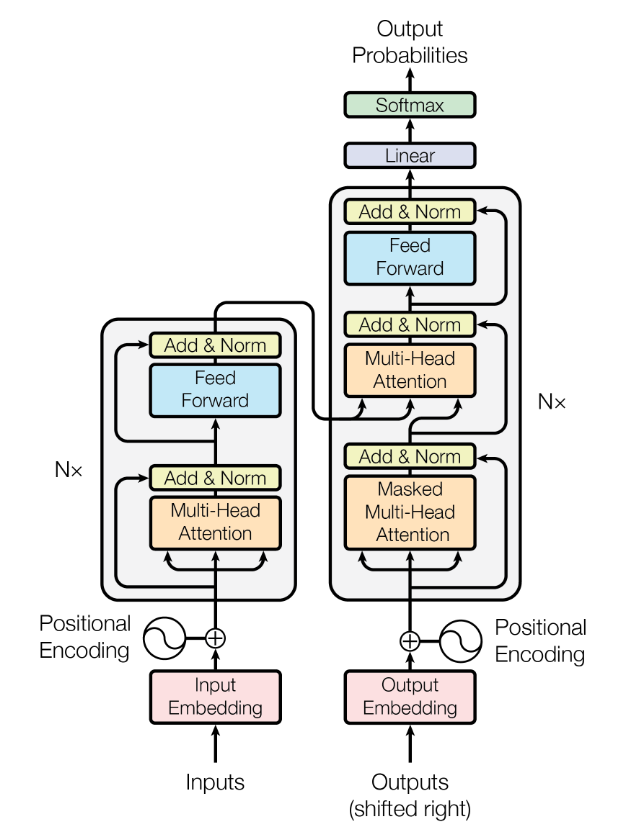

I decided to tackle the problem of text-to-speech synthesis again, this time with a transformer based architecture, to familiarize myself with the breakthrough architecture originally debuted in “Attention Is All You Need” [2] and then adapted for speech synthesis in “Neural Speech Synthesis with Transformer Network” [3]. This is a toy implementation so some things have been simplified, but I think text-to-speech models as a project, combined with the relevant reading, encourages a thorough understanding of machine learning principles. This is what I feel I’ve gained from the project, and I hope to share at least some insights in this post. This is an initial post that may be updated in the future or accompanied by subsequent posts.

Image 1: The transformer model described in “Attention Is All You Need”

Architecture

The architecture is transformer-based and closely resembles the model in “Neural Speech Synthesis with Transformer Network” [3]. I used a character-level tokenizer instead of a phoneme-based one. This likely reduces quality somewhat, because the character vocabulary is smaller than a phoneme inventory and characters line up less closely with the sounds actually spoken, but I judged the difference too small to justify building and testing a phoneme-level tokenizer as well. I did some testing with the G2p-en grapheme-to-phoneme converter and it worked well, but I could not find a clean way to import and save the relevant objects without building something more complicated, so I stuck with character-level encoding. I also used two small fully connected layers with a residual connection as a decoder post-net. Tacotron 2 [1] shows that a post-net (a stack of convolutional layers in that paper) improves quality by acting as a kind of “fine-tuning” that brings the decoder output closer to the target.
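The two deviations described above can be sketched in plain Python as follows. This is a hypothetical illustration, not the code used in the project: the alphabet, the choice of index 0 for padding, dropping unknown characters, and the ReLU activation in the post-net are all assumptions. The post-net here follows the fully connected description rather than Tacotron 2's convolutional post-net; the key idea is that its output is added back to the decoder frame, so it only has to learn a correction.

```python
class CharTokenizer:
    """Hypothetical character-level tokenizer.

    Index 0 is reserved for padding (an assumption); characters outside
    the alphabet are silently dropped.
    """

    def __init__(self, alphabet="abcdefghijklmnopqrstuvwxyz '.,?!-"):
        self.pad_id = 0
        self.char_to_id = {c: i + 1 for i, c in enumerate(alphabet)}
        self.id_to_char = {i: c for c, i in self.char_to_id.items()}

    @property
    def vocab_size(self):
        return len(self.char_to_id) + 1  # +1 for the padding token

    def encode(self, text):
        return [self.char_to_id[c] for c in text.lower() if c in self.char_to_id]

    def decode(self, ids):
        return "".join(self.id_to_char[i] for i in ids if i != self.pad_id)


def linear(x, weight, bias):
    """Dense layer: weight is a list of rows, shape (out_dim, in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def residual_postnet(frames, w1, b1, w2, b2):
    """Two fully connected layers whose output is added back to each input frame.

    With all weights and biases at zero this is the identity, so the
    post-net starts from the decoder output and learns only a residual.
    """
    refined = []
    for x in frames:
        hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU (assumed activation)
        correction = linear(hidden, w2, b2)
        refined.append([xi + ci for xi, ci in zip(x, correction)])
    return refined
```

For example, `CharTokenizer().encode("Hello, world!")` yields a list of nonzero ids that decodes back to `"hello, world!"`. A phoneme tokenizer would slot into the same `encode`/`decode` interface, which is one reason swapping tokenizers later need not touch the model.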

Not shown in the diagram is the process for creating the spectrogram. I used torchaudio to keep everything on one platform, although I had used librosa earlier in development with equal success. Knowing how short-time Fourier transforms (STFTs) produce spectrograms is helpful from a signal-processing standpoint, but the crucial understanding is this: a spectrogram is a frequency-time-magnitude representation of a signal that behaves like, and for our purposes is, an image. Many of the values in the raw spectrogram are too small to form meaningful shapes in that image, so the preprocessing exists to “blow up” the image and give the model more interesting structure to learn. The exact representation matters far less than that it is coherent and has not lost a significant amount of information.
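To make the STFT and the “blow-up” step concrete, here is a minimal pure-Python sketch, assuming a periodic Hann window, no centering or padding, and decibel (log) compression with an 80 dB floor below the peak. These parameters and function names are illustrative assumptions, not the project's settings. In practice one would use `torchaudio.transforms.Spectrogram` or `MelSpectrogram` followed by `torchaudio.transforms.AmplitudeToDB`; this version just shows what those steps compute.

```python
import cmath
import math


def hann_window(n):
    """Periodic Hann window (the default shape of torch.hann_window(n))."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]


def stft_magnitude(signal, n_fft=8, hop_length=4):
    """Naive STFT magnitude without centering/padding, returned as (freq, time)."""
    window = hann_window(n_fft)
    frames = []
    for start in range(0, len(signal) - n_fft + 1, hop_length):
        frame = [signal[start + i] * window[i] for i in range(n_fft)]
        # One-sided DFT: bins 0..n_fft//2 span 0 Hz up to the Nyquist frequency.
        bins = [
            abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n_fft) for t in range(n_fft)))
            for k in range(n_fft // 2 + 1)
        ]
        frames.append(bins)
    # Transpose frame-major data into the usual (frequency, time) image layout.
    return [list(row) for row in zip(*frames)]


def amplitude_to_db(spec, amin=1e-10, top_db=80.0):
    """Log-compress magnitudes to decibels, then clip to top_db below the peak.

    The log turns tiny magnitudes (which would be near-black pixels) into
    values on a comparable scale to the loud ones.
    """
    db = [[20.0 * math.log10(max(v, amin)) for v in row] for row in spec]
    peak = max(max(row) for row in db)
    return [[max(v, peak - top_db) for v in row] for row in db]
```

Feeding in `[math.sin(math.pi * n / 2) for n in range(32)]` (a tone completing one cycle every 4 samples) gives a 5 x 7 image whose brightest row is frequency bin 2 in every frame, which is the kind of “shape” the model learns from.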

I find it helpful to illustrate the concept of semantic meaning in an embedded space, and I will do so in another post. [Planned topics: Word2Vec, and how one piece of “ground truth” information gets diluted through observation -> discretization -> digitization -> probabilistic interpretation -> superposition of constructed or forced information (positional encoding).]
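The last item in that chain, positional encoding, is literally a superposition: a fixed pattern is added on top of each token embedding. A minimal sketch of the sinusoidal encoding from [2] follows; [3] uses a scaled variant in which a trainable weight multiplies the encoding, which is approximated here by a fixed `alpha` argument (an assumption for illustration; the function names are hypothetical).

```python
import math


def sinusoidal_positional_encoding(max_len, d_model):
    """PE[pos][2i] = sin(pos / 10000^(2i/d)), PE[pos][2i+1] = cos(same angle)."""
    table = []
    for pos in range(max_len):
        row = []
        for dim in range(d_model):
            # Dimensions 2i and 2i+1 share one frequency; higher i means slower oscillation.
            angle = pos / (10000 ** (2 * (dim // 2) / d_model))
            row.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positional_encoding(embeddings, alpha=1.0):
    """Superimpose position information onto a sequence of embedding vectors.

    alpha stands in for the trainable scale of the scaled positional
    encoding in [3]; here it is simply a fixed number.
    """
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + alpha * p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]
```

After the addition the model has no way to separate “what the token is” from “where it is” except by learning to, which is the sense in which the positional information is forced on top of the original signal.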

Challenges

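Training a transformer with masking is not “truly” autoregressive; in effect it uses 100% teacher forcing. During training the decoder receives the entire ground-truth spectrogram, shifted right by one frame, and a causal mask stops each position from looking ahead, so all frames are predicted in one parallel pass. At inference there is no ground truth, so the model must generate one frame at a time from its own previous outputs. As a result, the training loop is significantly different from the inference method.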
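The contrast between the two loops can be sketched with toy stand-ins. Everything here is hypothetical: frames are plain numbers, `go_frame` plays the role of the initial all-zero “go” frame, `step_fn` stands in for a full decoder pass, and a fixed `n_steps` replaces the stop-token prediction a real model would use. The mask convention (True means “may attend”) is chosen for readability; attention implementations differ, so check the one you use.

```python
def causal_mask(size):
    """mask[i][j] is True when output position i may attend to input position j."""
    return [[j <= i for j in range(size)] for i in range(size)]


def teacher_forced_inputs(target_frames, go_frame):
    """Training: the decoder sees the ground truth shifted right by one frame."""
    return [go_frame] + list(target_frames[:-1])


def autoregressive_inference(step_fn, go_frame, n_steps):
    """Inference: each new frame is predicted from the model's own earlier outputs."""
    decoder_inputs = [go_frame]
    outputs = []
    for _ in range(n_steps):
        next_frame = step_fn(decoder_inputs)  # one full decoder pass per generated frame
        outputs.append(next_frame)
        decoder_inputs.append(next_frame)  # the prediction, not ground truth, is fed back
    return outputs
```

With targets `[1, 2, 3]`, training feeds `[go, 1, 2]` together with a 3 x 3 lower-triangular mask in a single pass, while inference needs three sequential calls of `step_fn`. Because the model never sees its own mistakes during training, errors made at inference can compound (often called exposure bias).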

There was a learning curve in understanding the required audio preprocessing.

Another challenge was deciding where in the pipeline to do each piece of processing. I chose to do the bulk of the audio processing up front and serialize it into a .pkl file, so that during training the precomputed spectrograms could be loaded directly into RAM for fast access, and the training code did not have to keep track of everything required to compute spectrograms.
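A minimal sketch of that “preprocess once, pickle, then load into memory” pattern follows. The cache layout (a dict keyed by utterance id), the `featurize` callback, and both names `build_feature_cache` and `CachedFeatureDataset` are hypothetical. The dataset class exposes `__len__` and `__getitem__`, the map-style interface that `torch.utils.data.DataLoader` works with.

```python
import pickle


def build_feature_cache(items, featurize, path):
    """Run the expensive preprocessing once and serialize all results to one file.

    items: iterable of (utterance_id, text, audio); featurize: audio -> features.
    """
    cache = {utt_id: {"text": text, "features": featurize(audio)} for utt_id, text, audio in items}
    with open(path, "wb") as f:
        pickle.dump(cache, f, protocol=pickle.HIGHEST_PROTOCOL)


class CachedFeatureDataset:
    """Loads the whole cache into RAM once; each lookup afterwards is cheap."""

    def __init__(self, path):
        with open(path, "rb") as f:
            self._cache = pickle.load(f)  # only unpickle files you created yourself
        self._ids = sorted(self._cache)  # stable ordering for index-based access

    def __len__(self):
        return len(self._ids)

    def __getitem__(self, idx):
        entry = self._cache[self._ids[idx]]
        return entry["text"], entry["features"]
```

The trade-off is that every change to the preprocessing (window size, hop length, normalization) requires rebuilding the cache, and the whole dataset must fit in memory; in exchange, the training loop only ever sees ready-made tensors-to-be.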

I changed dependencies and design decisions too often, which made this project take somewhat longer than it otherwise would have. Most of my development in class has been done in .ipynb notebooks in Google Colab or similar environments, and I am still practicing better housekeeping and documentation habits.

I used unit tests and found them extremely helpful, but much of the test suite was discarded after design changes because of limited development time. Keeping and maintaining it would have made the project more robust.
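One way to write tests that are more likely to survive design changes is to pin down invariants of the pipeline rather than implementation details. The sketch below, using the standard `unittest` module, checks a hypothetical helper that predicts how many STFT frames a clip produces, a number that has to agree with the decoder's target length. It assumes the default `center=True` behavior of `torch.stft` and `librosa.stft`, which pad `n_fft // 2` samples on each side; the helper name and test values are illustrative.

```python
import unittest


def num_stft_frames(num_samples, n_fft, hop_length, center=True):
    """Expected number of STFT frames for a clip of num_samples samples."""
    if center:
        num_samples += 2 * (n_fft // 2)  # padding added on both sides when centering
    if num_samples < n_fft:
        raise ValueError("signal is shorter than one frame")
    return 1 + (num_samples - n_fft) // hop_length


class TestFrameCount(unittest.TestCase):
    def test_centered_matches_simple_formula(self):
        # For even n_fft, centering gives exactly 1 + num_samples // hop_length frames.
        for n in (1000, 12345, 22050):
            self.assertEqual(num_stft_frames(n, 1024, 256), 1 + n // 256)

    def test_uncentered_counts_full_frames_only(self):
        self.assertEqual(num_stft_frames(1024, 1024, 256, center=False), 1)
        self.assertEqual(num_stft_frames(1280, 1024, 256, center=False), 2)

    def test_too_short_signal_is_rejected(self):
        with self.assertRaises(ValueError):
            num_stft_frames(100, 1024, 256, center=False)
```

Saved as a module, this runs with `python -m unittest`. Because it tests a property of the data rather than a particular class or file format, it would still apply after switching, for example, from librosa to torchaudio.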